I recently performed at Culturelab in Newcastle as part of the RTV workshops. It was a good opportunity to test some of my pieces in front of an audience and to discuss the methods I used to construct them (some photos of the event here). I’ve since recorded the pieces and put them on YouTube, and there are some screenshots on Flickr. I’ve had a few people ask for more technical details on how I designed the system, so here is a brief rundown.

The performance system is almost totally automated. This doesn’t mean it is predefined: both the audio and visual components are generative, i.e. they are variable executions of a pre-trained algorithmic system. For the audio I use Pure Data (PD), a visual programming DSP (Digital Signal Processing) system that I have used for years (it’s an open source alternative to Max/MSP). I won’t go into too much detail on how I program generative audio in PD, but I have posted on this blog before about PD, with some downloadable examples.

FROM PD

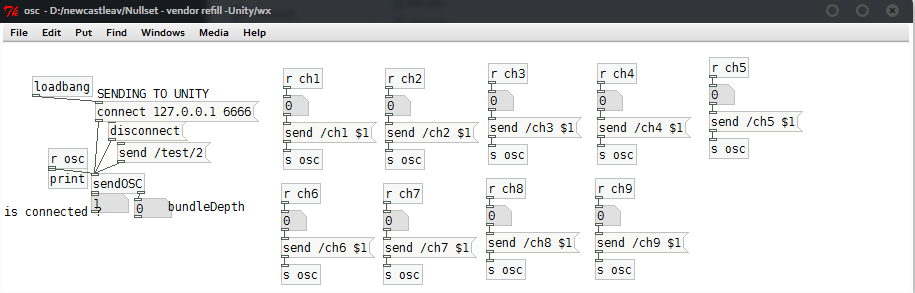

The sub patch in PD that handles sending to OSC is shown above. Each ‘song’ consists of a generative audio composition in PD, which sequences and processes an abstract electronic composition in real time, triggering samples, applying effects, playing with time signatures and so on. In most cases the parameters that control the compositional arcs in the audio generation are then sent to Unity via a network messaging protocol called OSC (Open Sound Control). (In the above image all the [r ch] & [s osc] boxes are routing these parameters from inside the core of the PD patch to the OSC sending part.) This allows me to run the two programs in parallel and send specific variables across the network as UDP packets, so Unity can react to changes in the composition. It means that the two aspects could even run on remote machines.
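To make the sending side concrete, here is a minimal Python sketch of what an OSC message looks like on the wire and how it could be fired at a UDP port. It is not the PD patch itself: the address `/ch/1` and the helper names are made up for illustration, and only float arguments are handled. The default port is the 6666 used in this setup.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC-string: ASCII, NUL-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *values):
    """Build an OSC message whose arguments are all big-endian float32 values."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", value) for value in values)
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(packet, host="127.0.0.1", port=6666):
    """Send one OSC packet as a single UDP datagram (host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Calling `send_osc(osc_message("/ch/1", 0.5))` would push one parameter value to a listener on the same machine; pointing `host` at another address is all it takes to split audio and visuals across two computers.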

TO UNITY

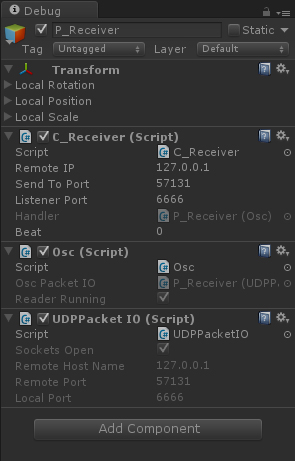

In Unity a series of scripts listen for incoming UDP data on the relevant port (6666 in this case) and extract the OSC packets. A message handling script (C_Receiver, shown above left) then processes these and passes the contained values to the various visual processes controlled by other scripts in the scene.
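For anyone curious what "extracting OSC packets" involves, the following Python sketch parses a single OSC message out of a raw UDP datagram. It is an illustration rather than the C_Receiver script: the function names are hypothetical, only float and int arguments are supported, and OSC bundles are ignored.

```python
import socket
import struct


def read_osc_string(data, offset):
    """Read a NUL-terminated OSC-string and return it with the next 4-byte-aligned offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip the terminator and any padding
    return text, next_offset


def parse_osc_message(packet):
    """Return (address, values) for a message with float32 ('f') or int32 ('i') arguments."""
    address, offset = read_osc_string(packet, 0)
    type_tags, offset = read_osc_string(packet, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values = []
    for tag in type_tags[1:]:
        if tag == "f":
            values.append(struct.unpack_from(">f", packet, offset)[0])
        elif tag == "i":
            values.append(struct.unpack_from(">i", packet, offset)[0])
        else:
            raise ValueError("unsupported OSC type tag: " + tag)
        offset += 4
    return address, values


def listen(handler, port=6666):
    """Block forever, passing each parsed message to handler(address, values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            packet, _sender = sock.recvfrom(4096)
            handler(*parse_osc_message(packet))
```

A receiver would typically look up the address in a table and forward the values to whichever visual process has subscribed to it.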

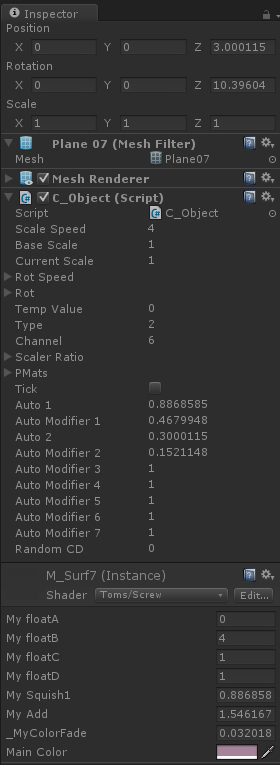

In the image above right you can see the inspector of a script called C_Object (I know, terrible naming convention). This script is on an object that uses a simple parametric surface shader to produce a specific mesh surface (in this case a screw formula). A number of parameters define the way the surface is constructed within the vertex shader: variables such as inner radius, wind direction, scale etc. are exposed through the shader material. These values are then updated by the C_Object script, based on incoming OSC messages. The C_Object script can smooth the incoming OSC values to prevent sudden jumps, detect large fluctuations (in order to trigger one-shot events), scale the values over time and perform other interesting modulation functions.
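The smoothing and jump detection can be sketched in a few lines of Python. This is not the C_Object script: the class name, the exponential smoothing and the fixed scale factor are all assumptions chosen to show one plausible way of doing it.

```python
class ParameterModulator:
    """Smooths an incoming value, flags large jumps and applies a scale factor."""

    def __init__(self, smoothing=0.2, jump_threshold=0.5, scale=1.0):
        self.smoothing = smoothing            # 0..1, higher follows the input faster
        self.jump_threshold = jump_threshold  # raw change that counts as a one-shot trigger
        self.scale = scale                    # constant multiplier applied to the output
        self.smoothed = None
        self.previous_raw = None

    def update(self, raw):
        """Feed one raw value; return (scaled smoothed value, triggered flag)."""
        triggered = (
            self.previous_raw is not None
            and abs(raw - self.previous_raw) > self.jump_threshold
        )
        self.previous_raw = raw
        if self.smoothed is None:
            self.smoothed = raw  # the first sample initialises the filter
        else:
            # exponential smoothing: move a fraction of the way towards the new value
            self.smoothed += self.smoothing * (raw - self.smoothed)
        return self.smoothed * self.scale, triggered
```

The scaled value would be written to the material each frame, while the trigger flag fires a one-shot event. Detecting jumps on the raw input rather than the smoothed output keeps the trigger responsive even with heavy smoothing.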

SHADERS

Finally here’s an example of the screw vertex shader function.

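```
v2f vert (appdata_base v)
{
    v2f o;
    o.pos = v.vertex;

    // squash and offset the grid along x before warping
    o.pos.x *= _MySquish1;
    o.pos.x += _MyAdd;

    // screw: use the grid's x/y as the surface parameters u/v
    float M_u = o.pos.x * 8;
    float M_v = o.pos.y * 12.5;
    o.pos.x = _MyFloatA * (M_u) * cos(M_v);
    o.pos.y = _MyFloatA * (M_u) * sin(M_v);
    o.pos.z = _MyFloatA * (M_v) * cos(M_u);

    // brightness from the length of the warped position, tinted by the material colour
    float dist = length(o.pos) * _MyColorFade;
    o.color[0] = dist;
    o.color[1] = dist;
    o.color[2] = dist;
    o.color *= _MyColor;

    return o;
}
```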

This is based on one of Paul Bourke’s formulas; Bourke’s pages are an amazing resource for anyone interested in mathematical models of 3D forms. In the current set I also use a version of his supershape code and his spherical harmonics work. Credit also has to go to desaxismundi, who converted some of these functions for use in vvvv (another DSP environment I have used). More specifically, the surface shaders take a simple grid mesh and use a series of trigonometric functions to warp it into mathematical 3D forms (you can see lots on Bourke’s pages). I converted the formulas to work with Unity shaders and then I use these to produce the warping 3D forms you see in the performance videos. This is just one of the shaders; I used a different one for each ‘song’. The others include vertex noise distortion, superformula, simple scaling & FFT analysis, and procedural noise. In the tracks I have multiple forms using these shaders, each responding to different track parameters and also affected by their own internal modulators (long-term LFOs etc.).
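If you want to play with the screw formula outside Unity, here is a rough Python port of the position part of the vertex function. The parameter names mirror the shader uniforms, but the grid range of -0.5 to 0.5 and the default values are assumptions, not taken from the project.

```python
import math


def screw_vertex(x, y, squish=1.0, add=0.0, float_a=1.0):
    """Warp one flat grid point (x, y) into the 3D screw surface."""
    x = x * squish + add  # same role as _MySquish1 and _MyAdd
    u = x * 8.0
    v = y * 12.5
    return (
        float_a * u * math.cos(v),
        float_a * u * math.sin(v),
        float_a * v * math.cos(u),
    )


def warp_grid(n=16, **params):
    """Warp an n x n grid spanning -0.5..0.5 on both axes (assumed mesh extent)."""
    points = []
    for row in range(n):
        for col in range(n):
            x = col / (n - 1) - 0.5
            y = row / (n - 1) - 0.5
            points.append(screw_vertex(x, y, **params))
    return points
```

Feeding the result of `warp_grid` into any 3D scatter plot gives a quick preview of how changing `squish`, `add` or `float_a` reshapes the form before touching the shader.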

In addition to the forms being affected/generated in real time, I wrote a series of post-process shaders that also respond to incoming OSC signals (bleaching out the screen, causing double vision, pixelation etc.). The two work in parallel, with the post FX relating to overall track information whereas the forms relate to smaller changes. I was quite pleased with the overall effect, and although much of the technique is classic demoscene work, I really enjoy revisiting this sort of coding every now and again.
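As a rough idea of the maths behind two of those effects, here is a Python sketch of pixelation and bleaching as they are commonly done in post-process shaders. These are generic textbook versions with hypothetical function names, not the shaders from the project; in practice `blocks` and `amount` would be the values driven by OSC.

```python
import math


def pixelate_uv(u, v, blocks):
    """Snap a 0..1 UV coordinate to the centre of its cell on a blocks x blocks grid."""
    col = min(int(math.floor(u * blocks)), blocks - 1)  # clamp so u == 1.0 stays in range
    row = min(int(math.floor(v * blocks)), blocks - 1)
    return (col + 0.5) / blocks, (row + 0.5) / blocks


def bleach(colour, amount):
    """Blend an RGB colour (components 0..1) towards white; amount 0 = unchanged, 1 = white."""
    return tuple(c + (1.0 - c) * amount for c in colour)
```

Sampling the rendered frame at the snapped UV instead of the original one gives the blocky look, and fewer blocks means chunkier pixels.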

UPDATE:

I’ve uploaded a zip containing a demo Unity project (Unity 4, but the scripts are extractable) and a single PD patch you can link up. (NB: some of the code is developed from a bunch of posts on the Unity forums, but I had to alter it a bit to work for me.)