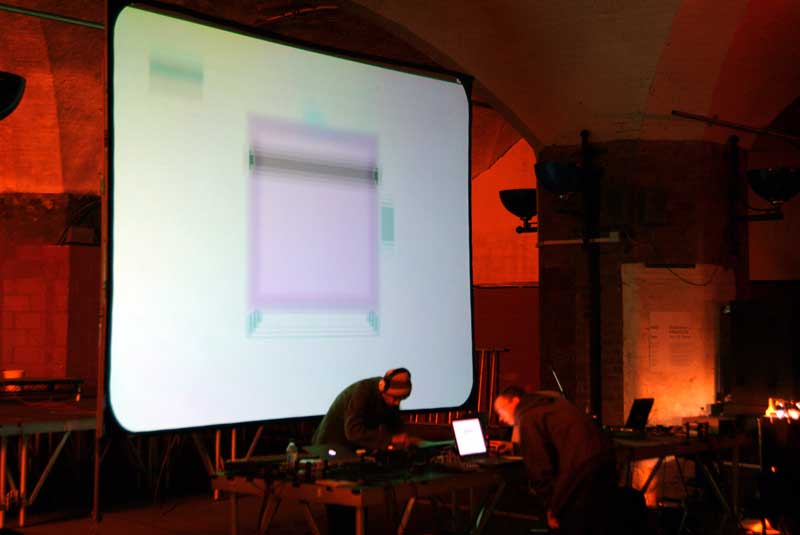

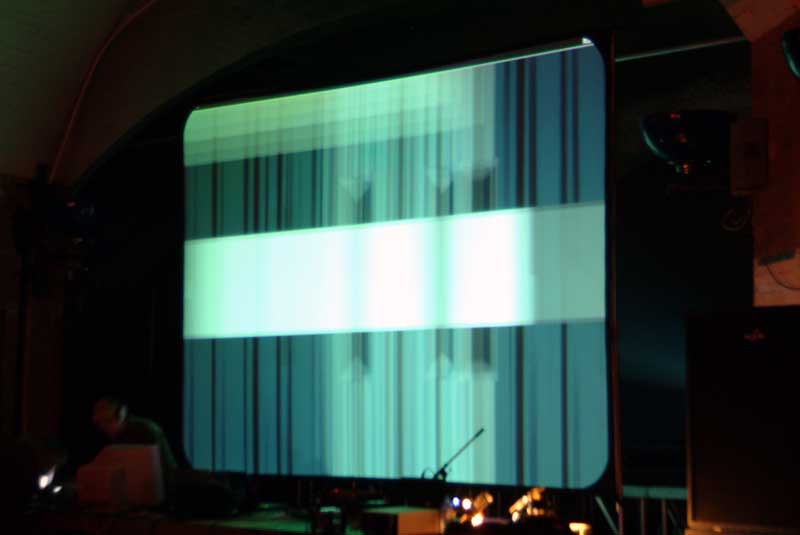

Pixelmap is a system for producing simple geometric patterns from a compact object language. Using a short list of methods and keywords, users can trigger and manipulate a large number of graphic elements on screen. Pixelmap reacts to messages sent over UDP or TCP, so it can work across a network and run on a different machine from the one sending the messages. Any application that can send UDP or TCP messages (such as Max, Pd, or SuperCollider) can act as the source.
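As an illustration of the kind of client that could drive Pixelmap, here is a minimal Python sketch that sends plain-text messages as UDP datagrams. Everything specific in it is an assumption: the port (`9000`), the helper names `format_message` and `send_message`, and the example messages (`square 1 size 0.25`, `move 1 0.5 0.5`) are hypothetical, and the space-separated, semicolon-terminated format simply mirrors how Pd's `netsend` formats messages. Pixelmap's actual keywords and wire format are not documented here.

```python
import socket

# Hypothetical defaults: this write-up does not state Pixelmap's port
# or its exact message syntax.
PIXELMAP_HOST = "127.0.0.1"
PIXELMAP_PORT = 9000


def format_message(*words):
    """Join words into a Pd-style message: space-separated, ';'-terminated."""
    return " ".join(str(w) for w in words) + ";\n"


def send_message(words, host=PIXELMAP_HOST, port=PIXELMAP_PORT):
    """Send one message as a single UDP datagram and return the bytes sent."""
    payload = format_message(*words).encode("ascii")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
    return payload


if __name__ == "__main__":
    # Hypothetical messages: create object 1 as a square, then move it.
    send_message(["square", 1, "size", 0.25])
    send_message(["move", 1, 0.5, 0.5])
```

Because UDP is connectionless, the sender does not need Pixelmap to acknowledge anything, which suits live performance. The trade-off is that a lost datagram is simply dropped. TCP would guarantee delivery and ordering at the cost of a persistent connection.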

The intention is for the system to let composers accompany their performances with graphics generated in real time. These can be scripted or generated algorithmically in much the same way as the performance itself. Instead of reacting to sound, as most common FFT-based audio-visual systems do, Pixelmap allows animation to be designed and performed alongside an audio composition. Using an application such as Pd, performers can send generative signals to Pixelmap to vary the animation in real time (i.e., the processes generating Pixelmap messages can be totally independent of any audio processes).

One of the aims of this project is to link music visuals much more closely with the audio (as opposed to the current trend of disassociated, image-driven VJ systems). In Pixelmap, note (or rather object) polyphony and modifiers can be assigned, much as in a traditional synthesiser. This allows a direct correlation between audio objects and their visual representation, using one-to-one data mapping rather than FFT analysis data (although FFT data can also trigger Pixelmap objects!).
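To make the synthesiser analogy concrete, here is a sketch of fixed-polyphony voice allocation. Each incoming note is mapped one-to-one onto a visual object slot, and when every slot is busy the oldest voice is stolen, a common synthesiser strategy. The `VoicePool` class and its methods are hypothetical illustrations; Pixelmap's own allocation policy is not described in this write-up.

```python
from collections import OrderedDict


class VoicePool:
    """Fixed-polyphony allocator mapping notes to visual object slots.

    Illustrative only: when all slots are busy, the oldest active voice
    is stolen. This is not necessarily Pixelmap's policy.
    """

    def __init__(self, polyphony):
        if polyphony < 1:
            raise ValueError("polyphony must be at least 1")
        self.free = list(range(polyphony))  # unused slot ids, lowest first
        self.active = OrderedDict()         # note -> slot, oldest first

    def note_on(self, note):
        """Return the slot that will draw this note, stealing if necessary."""
        if note in self.active:
            # Retrigger: keep the same slot but mark it as most recent.
            self.active.move_to_end(note)
            return self.active[note]
        if self.free:
            slot = self.free.pop(0)
        else:
            _, slot = self.active.popitem(last=False)  # steal the oldest voice
        self.active[note] = slot
        return slot

    def note_off(self, note):
        """Release the note's slot; return the slot id, or None if inactive."""
        slot = self.active.pop(note, None)
        if slot is not None:
            self.free.append(slot)
        return slot


if __name__ == "__main__":
    pool = VoicePool(polyphony=2)
    print(pool.note_on(60))  # 0
    print(pool.note_on(64))  # 1
    print(pool.note_on(67))  # 0 (steals the slot held by note 60)
```

The returned slot id is what a client would put in its outgoing messages, so a note-on/note-off pair in the audio maps directly to one visual object appearing and disappearing.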

Although I haven’t updated this project for some time, I still thought it was worth documenting for completeness' sake.